Where does machine learning bias come from?

Bias can creep into machine learning (ML) systems in many ways, and when it does, the system's results become sub-optimal. In this post, let's look at how past data can create or exacerbate bias. In my earlier post, we learned that the training dataset is a pool of past data that the machine uses to learn which patterns belong to which outcome group. Learning these patterns allows the machine to correctly classify new data it has not seen before.

Garbage in, garbage out

There are many ways in which the training dataset can introduce bias into a system. To keep things simple, we will focus on two: sample bias and stereotype bias.

Sample bias: This kind of bias occurs when the training data does not represent the environment in which the model will actually be used. If we trained a self-driving car to drive on British roads, it would learn to give way to traffic already in a roundabout. If we then test-drove the same car in Paris, it could cause an accident, because in Paris, drivers already inside a roundabout give way to traffic entering it (I'm not sure whether they still do this, but it is what I noticed at the Arc de Triomphe when I visited Paris many years ago: really scary stuff). The idea is that the scenarios in which we train the machine should closely match the scenarios in which it will operate.

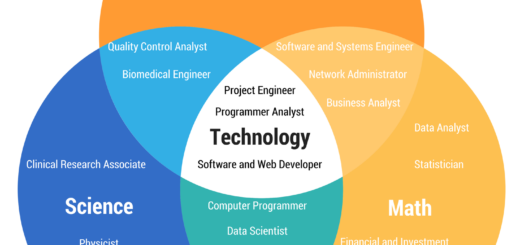

Stereotype bias: This kind of bias occurs when certain groups are under-represented in the data. In many cases, it reflects existing prejudices in the real world. For example, training on data in which doctors are mostly male and nurses are mostly female is likely to exacerbate this bias. In 2019, a group of researchers used Google Translate to translate sentences containing different job titles from several gender-neutral languages into English. Google Translate assigned the pronoun “he” to sentences with STEM job titles at a much higher rate than would be expected from real-world data.
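One simple way to spot under-representation before training is to count, for each job title, what share of the examples belongs to each group. The sketch below does this for a tiny, made-up set of (job title, gender) records in the spirit of the doctors and nurses example; the records, the `group_shares` and `underrepresented` helpers, and the 0.3 minimum-share threshold are all hypothetical illustrations, not a standard method.

```python
from collections import Counter, defaultdict

# Hypothetical toy training records: (job_title, gender). Not real data.
records = [
    ("doctor", "male"), ("doctor", "male"), ("doctor", "male"), ("doctor", "female"),
    ("nurse", "female"), ("nurse", "female"), ("nurse", "female"), ("nurse", "male"),
    ("engineer", "male"), ("engineer", "male"), ("engineer", "male"),
]
GROUPS = ("female", "male")


def group_shares(rows, groups):
    """Return {job_title: {group: share of that job's examples}}, including zero shares."""
    counts = defaultdict(Counter)
    for job, group in rows:
        counts[job][group] += 1
    return {
        # Counter returns 0 for groups that never appear, so missing groups show up as 0.0.
        job: {g: counter[g] / sum(counter.values()) for g in groups}
        for job, counter in counts.items()
    }


def underrepresented(shares, min_share=0.3):
    """List (job_title, group) pairs whose share falls below a chosen (arbitrary) threshold."""
    return [(job, g) for job, s in shares.items() for g, v in s.items() if v < min_share]


shares = group_shares(records, GROUPS)
for job in shares:
    print(job, shares[job])
print(underrepresented(shares))
# [('doctor', 'female'), ('nurse', 'male'), ('engineer', 'female')]
```

A check like this only tells you the data is skewed; deciding what share is "too low" depends on the problem and on what the real-world population looks like.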

In summary, if we feed “bad” data into the system, we are likely to get less-than-ideal results.

What should we do?

Data scientists need to think about the circumstances in which their models will be used. Engineers who build models to predict the reliability of an aeroplane engine will take into account the climate that the aeroplane operates in: models built using data from aeroplanes that fly in cooler climates will typically not be used for aeroplanes operating in warmer climates.

The other thing data scientists need to be mindful of is covariate shift. This is when the distribution of the input data the model sees in use drifts away from the distribution it was trained on, for example as the population it serves changes over time. If we train a facial recognition application using images of younger faces, the model may become less accurate as the customers get older. Covariate shifts may be sudden or gradual, and they are not always easy to identify.
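One common way to watch for covariate shift is to compare the distribution of an input attribute in the training data with the same attribute in recent production data. The sketch below computes the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical CDFs) for hypothetical customer ages, echoing the facial recognition example. The ages, the `shifted` helper and its 0.3 alert threshold are assumptions for illustration; in practice you might use a proper significance test such as `scipy.stats.ks_2samp` and tune the threshold to your own data.

```python
from bisect import bisect_right


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max |F_a(x) - F_b(x)| over all observed x."""
    a, b = sorted(a), sorted(b)
    gap = 0.0
    # The largest gap between two empirical CDFs always occurs at one of the data points.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def shifted(train, recent, threshold=0.3):
    """Flag a possible covariate shift when the KS gap exceeds a (hypothetical) threshold."""
    return ks_statistic(train, recent) > threshold


# Hypothetical customer ages: training data, a later similar sample, and an older population.
train_ages = [22, 25, 27, 29, 31, 33, 35, 38]
similar_ages = [23, 26, 28, 30, 32, 34, 36, 37]
older_ages = [30, 34, 37, 41, 44, 47, 50, 53]

print(ks_statistic(train_ages, similar_ages), shifted(train_ages, similar_ages))  # 0.125 False
print(ks_statistic(train_ages, older_ages), shifted(train_ages, older_ages))      # 0.625 True
```

Running a check like this on a schedule helps with gradual shifts, which rarely show up as a single obvious jump.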

Data scientists also need to diversify their training data: a model trained on diverse, representative data is less likely to introduce or exacerbate bias. During the testing phase, data scientists should also look at model performance metrics by subgroup. Instead of looking only at overall model accuracy, they could, for example, compare accuracy for males versus females, since a good overall figure can hide poor performance on a smaller group.
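Here is a minimal sketch of breaking accuracy down by subgroup. The labels, predictions and group tags are made-up values chosen to show how a respectable overall accuracy can hide a much weaker result for an under-represented group; the `accuracy_by_group` helper is a hypothetical name, not a library function.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup label in `groups`."""
    buckets = defaultdict(lambda: ([], []))  # group -> (true labels, predictions)
    for t, p, g in zip(y_true, y_pred, groups):
        buckets[g][0].append(t)
        buckets[g][1].append(p)
    return {g: accuracy(ts, ps) for g, (ts, ps) in buckets.items()}


# Hypothetical test set: 7 male and 3 female examples.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 0, 0]
groups = ["male"] * 7 + ["female"] * 3

print("overall:", accuracy(y_true, y_pred))  # overall: 0.8
by_group = accuracy_by_group(y_true, y_pred, groups)
print({g: round(v, 2) for g, v in by_group.items()})  # {'male': 1.0, 'female': 0.33}
```

The same pattern works for any metric (precision, recall, false positive rate), not just accuracy.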

In later posts, I will introduce some bias measures and mitigation techniques to help data scientists understand and address bias. In my next post, however, we will continue with sources of bias: what role does the algorithm itself play in introducing or exacerbating bias?